CPU Caches

See Memory Hierarchy.

Caches hold copies of data from main memory. There are also dedicated instruction caches for memory that contains instructions. See also: Cache Optimizations

Reasons for caching #

- Temporal locality

- (The same) addresses recently accessed will probably be accessed again in the near future

- Spatial locality

- Addresses near recently accessed addresses will probably also be accessed in the near future
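As a rough illustration of spatial locality, the sketch below counts how often a sequence of accesses moves to a different cache line. The 64-byte line size, the 4-byte element size, and the helper name `count_line_switches` are assumptions chosen for the example, not properties of any particular CPU.

```python
LINE_SIZE = 64  # bytes per cache line (assumed)
ELEM_SIZE = 4   # bytes per array element (assumed)


def count_line_switches(addresses, line_size=LINE_SIZE):
    """Count how often consecutive accesses land in a different cache line."""
    switches = 0
    last_line = None
    for addr in addresses:
        line = addr // line_size
        if line != last_line:
            switches += 1
            last_line = line
    return switches


# Byte addresses for walking a 64x64 array stored row by row.
rows, cols = 64, 64
row_major = [(r * cols + c) * ELEM_SIZE for r in range(rows) for c in range(cols)]
col_major = [(r * cols + c) * ELEM_SIZE for c in range(cols) for r in range(rows)]

print(count_line_switches(row_major), count_line_switches(col_major))
```

Walking the array in storage order touches each line once and then reuses it for the neighbouring elements, while the column-wise walk changes line on every access.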

Implementations of caches #

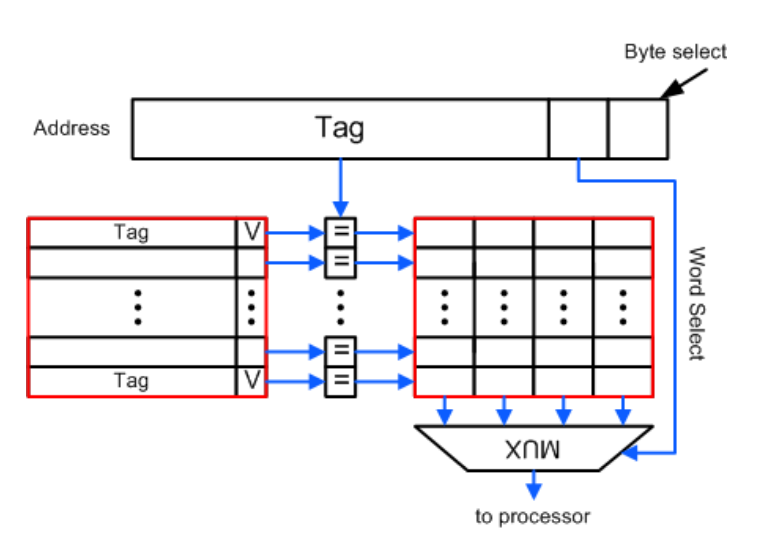

Fully associative cache #

- The address is split into a tag, a word select, and a byte select

- Any cache line can hold any memory line (subject to alignment)

- The tag must be compared against every cache line in parallel -> expensive, hard to build, and slow

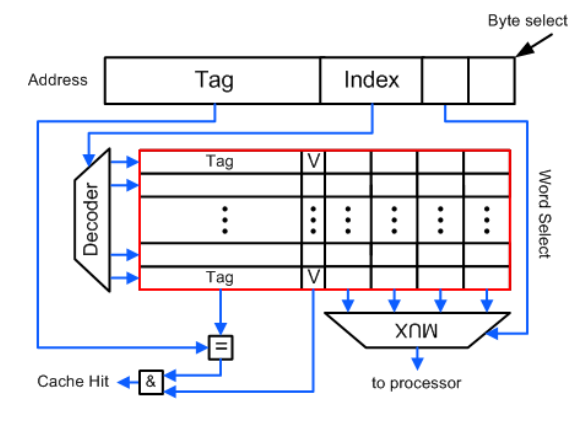
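A minimal software sketch of a fully associative lookup, under some simplifying assumptions: word select and byte select are merged into one byte offset, the line size is 64 bytes, and a full cache evicts its oldest line. The class and function names are made up for illustration.

```python
LINE_SIZE = 64  # bytes per cache line (assumed)


def split_address(addr, line_size=LINE_SIZE):
    """Split a byte address into (tag, byte offset within the line)."""
    return addr // line_size, addr % line_size


class FullyAssociativeCache:
    def __init__(self, num_lines):
        self.num_lines = num_lines
        self.tags = []  # tags of the lines currently held, oldest first

    def access(self, addr):
        """Return True on a hit, False on a miss (the line is then loaded)."""
        tag, _ = split_address(addr)
        # Hardware compares all stored tags in parallel; here it is a scan.
        hit = any(stored == tag for stored in self.tags)
        if not hit:
            if len(self.tags) == self.num_lines:
                self.tags.pop(0)  # evict the oldest line (arbitrary choice)
            self.tags.append(tag)
        return hit
```

Because any line can hold any block, two addresses never evict each other unless the whole cache is full.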

Direct mapped cache #

- The address is split into tag, index, word select, and byte select

- A decoder uses the index to select the single cache line the address can map to -> very fast access

- If unlucky, frequently accessed data keeps colliding in the same cache line, and the colliding lines cannot be held in the cache at the same time

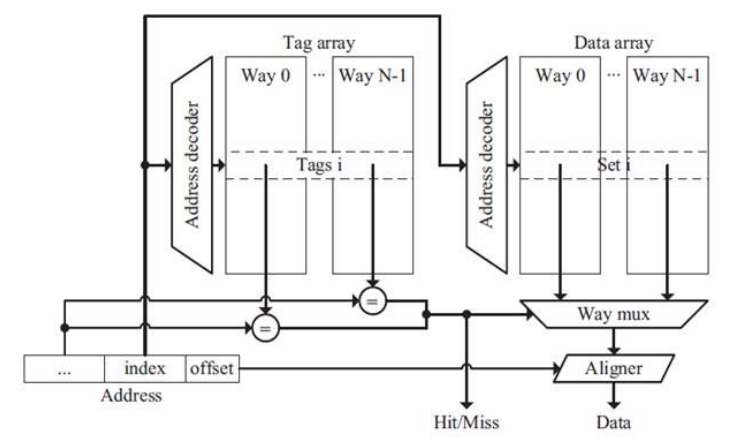
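The address split and the collision problem of a direct mapped cache can be sketched as follows. The geometry (64 lines of 64 bytes, so 4 KiB) and all names are assumptions for the example; word select and byte select are again merged into one offset.

```python
LINE_SIZE = 64  # bytes per cache line (assumed)
NUM_LINES = 64  # number of cache lines (assumed, 4 KiB cache in total)


def split_address(addr):
    """Split a byte address into (tag, index, byte offset)."""
    offset = addr % LINE_SIZE
    index = (addr // LINE_SIZE) % NUM_LINES
    tag = addr // (LINE_SIZE * NUM_LINES)
    return tag, index, offset


class DirectMappedCache:
    def __init__(self):
        self.lines = [None] * NUM_LINES  # stored tag per cache line

    def access(self, addr):
        """Return True on a hit; on a miss the indexed line is replaced."""
        tag, index, _ = split_address(addr)
        hit = self.lines[index] == tag
        self.lines[index] = tag
        return hit


def count_misses(cache, addresses):
    return sum(not cache.access(addr) for addr in addresses)


# Addresses 0 and 4096 share index 0, so they keep evicting each other.
print(count_misses(DirectMappedCache(), [0, 4096] * 5))
# Addresses 0 and 64 use different lines and only miss on first use.
print(count_misses(DirectMappedCache(), [0, 64] * 5))
```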

Set-associative cache #

- Combines multiple direct mapped caches (ways) that are searched associatively: the index selects one cache line in each way, so only a limited number of cache lines have to be checked.
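A sketch of a set-associative lookup, assuming LRU replacement inside each set and a 64-byte line. The class name and parameters are hypothetical. With `ways=1` the model degenerates into a direct mapped cache.

```python
class SetAssociativeCache:
    def __init__(self, num_sets, ways, line_size=64):
        self.num_sets = num_sets
        self.ways = ways
        self.line_size = line_size
        # One list of tags per set, least recently used first.
        self.sets = [[] for _ in range(num_sets)]

    def access(self, addr):
        """Return True on a hit, False on a miss (the line is then loaded)."""
        block = addr // self.line_size
        index = block % self.num_sets
        tag = block // self.num_sets
        tags = self.sets[index]  # only these `ways` entries are checked
        if tag in tags:
            tags.remove(tag)
            tags.append(tag)  # mark as most recently used
            return True
        if len(tags) == self.ways:
            tags.pop(0)  # evict the least recently used way
        tags.append(tag)
        return False


def count_misses(cache, addresses):
    return sum(not cache.access(addr) for addr in addresses)


trace = [0, 4096] * 5  # two addresses that map to the same set
print(count_misses(SetAssociativeCache(num_sets=32, ways=2), trace))
print(count_misses(SetAssociativeCache(num_sets=64, ways=1), trace))
```

Both configurations hold 4 KiB, but the two-way version keeps both colliding lines in the same set, so only the first access to each address misses.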

Cache update strategies #

Write through cache #

- Every write to the cache is immediately propagated to the next level of the memory hierarchy

- Requires more bus bandwidth

- On a write miss:

- Write allocate: fetch the line into the cache first, then write through

- No-write allocate: write to the next level only; the cache is left unchanged

Write back cache #

- Modifications are stored in the cache and only propagated to memory when the cache line is evicted

- Uses a dirty bit per cache line

- Reduces bus traffic
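To make the difference in bus traffic concrete, the following sketch counts the writes that reach memory under both strategies. It assumes a tiny direct mapped cache with write allocation in both modes; the class name `WritePolicyCache` and its parameters are invented for the example.

```python
class WritePolicyCache:
    """Tiny direct mapped cache model that only counts writes to memory."""

    def __init__(self, write_back, num_lines=4, line_size=64):
        self.write_back = write_back
        self.num_lines = num_lines
        self.line_size = line_size
        self.tags = [None] * num_lines
        self.dirty = [False] * num_lines  # only used in write-back mode
        self.memory_writes = 0

    def write(self, addr):
        block = addr // self.line_size
        index = block % self.num_lines
        tag = block // self.num_lines
        if self.tags[index] != tag:  # write miss: allocate the line
            self._write_back_if_dirty(index)
            self.tags[index] = tag
        if self.write_back:
            self.dirty[index] = True  # defer the memory update
        else:
            self.memory_writes += 1  # write through immediately

    def _write_back_if_dirty(self, index):
        if self.dirty[index]:
            self.memory_writes += 1
            self.dirty[index] = False

    def flush(self):
        """Write all dirty lines back to memory."""
        for index in range(self.num_lines):
            self._write_back_if_dirty(index)


write_through = WritePolicyCache(write_back=False)
write_back = WritePolicyCache(write_back=True)
for _ in range(100):
    write_through.write(0)
    write_back.write(0)
write_back.flush()
print(write_through.memory_writes, write_back.memory_writes)
```

Repeated writes to the same line cost one memory write each with write through, but a single write-back once the dirty line leaves the cache.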

Cache Replacement strategies #

- Which cache line to evict?

- Random: evict an arbitrary line; trivial to implement

- LRU: theoretically good, but hard to implement in hardware

- FIFO: evict the oldest line; only loosely based on temporal locality
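The difference between LRU and FIFO can be sketched on a small fully associative cache. The trace of block numbers and the function name `count_misses` are made up for the example; random replacement is left out to keep the result deterministic.

```python
from collections import OrderedDict


def count_misses(trace, capacity, policy):
    """Count misses for a fully associative cache using 'lru' or 'fifo'."""
    cache = OrderedDict()  # keys are block numbers, first key is next victim
    misses = 0
    for block in trace:
        if block in cache:
            if policy == "lru":
                cache.move_to_end(block)  # a hit refreshes the block's age
            continue  # FIFO ignores hits: order is insertion order only
        misses += 1
        if len(cache) == capacity:
            cache.popitem(last=False)  # evict the first (oldest) entry
        cache[block] = True
    return misses


trace = [1, 2, 3, 1, 4, 1, 2]
print(count_misses(trace, capacity=3, policy="lru"))   # 5
print(count_misses(trace, capacity=3, policy="fifo"))  # 6
```

In this trace block 1 is reused often. LRU keeps it because each hit refreshes it, while FIFO evicts it as soon as it becomes the oldest entry.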

Reasons for Cache Misses #

- Cold miss

- Access of data that was never in cache before (nothing can be done to prevent this)

- Capacity miss

- Memory block was in cache before but had to be evicted due to limited cache size (can be improved with bigger caches)

- Conflict miss

- Memory block was in cache before but had to be evicted because another block mapped to the same cache line (as in a direct mapped cache)
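One common way to tell the three kinds of misses apart is to run a fully associative LRU cache of the same size alongside the real cache: a repeated block that misses in both is counted as a capacity miss, one that misses only in the real cache as a conflict miss. The sketch below applies this to a direct mapped cache. The function name and the trace of block numbers are assumptions for illustration.

```python
from collections import OrderedDict


def classify_misses(trace, num_lines):
    """Classify accesses of a direct mapped cache with `num_lines` lines."""
    seen = set()                 # blocks that were accessed at least once
    direct = [None] * num_lines  # block held by each direct mapped line
    full = OrderedDict()         # reference: fully associative LRU cache
    counts = {"hit": 0, "cold": 0, "capacity": 0, "conflict": 0}
    for block in trace:
        # Update the fully associative reference cache first.
        full_hit = block in full
        if full_hit:
            full.move_to_end(block)
        else:
            if len(full) == num_lines:
                full.popitem(last=False)
            full[block] = True
        # Classify the access in the direct mapped cache.
        index = block % num_lines
        if direct[index] == block:
            counts["hit"] += 1
        elif block not in seen:
            counts["cold"] += 1
        elif full_hit:
            counts["conflict"] += 1  # enough room overall, wrong mapping
        else:
            counts["capacity"] += 1  # would miss even without conflicts
        direct[index] = block
        seen.add(block)
    return counts


print(classify_misses([0, 4, 0, 4, 1, 2, 3, 5, 0], num_lines=4))
```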

Blocking vs Non-Blocking Cache #

A blocking cache stalls on a miss until the data arrives from the next cache level.

Non-blocking caches can still serve other memory accesses while an earlier load is waiting for its data.

For non-blocking caches, additional components are necessary:

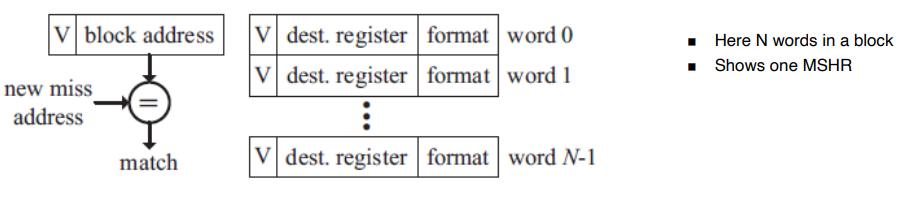

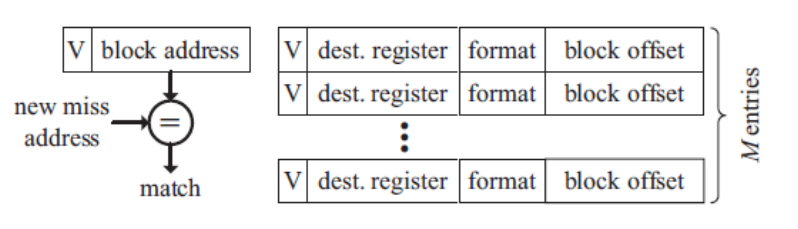

- Miss Status/Information Holding Registers (MSHRs)

- Store information about pending misses

- Fill Buffer

- Holds fetched data before it is written to the data array

Categories of Cache misses (for non-blocking caches) #

- Primary miss

- the first time a miss occurs to a memory block

- Secondary miss

- subsequent misses to the same memory block while the data is still being fetched

- Structural-stall miss

- a secondary miss that the available hardware resources cannot handle
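The three categories can be sketched with a small MSHR model. It assumes that a free MSHR is always available for a new block and that each MSHR can track a fixed number of waiting accesses; the class `MSHRFile` and its methods are hypothetical.

```python
class MSHRFile:
    def __init__(self, targets_per_mshr):
        self.targets_per_mshr = targets_per_mshr
        self.pending = {}  # block number -> word offsets waiting for it

    def miss(self, block, offset):
        """Record a miss and return its category."""
        targets = self.pending.get(block)
        if targets is None:
            self.pending[block] = [offset]  # allocate an MSHR, start fetch
            return "primary"
        if len(targets) < self.targets_per_mshr:
            targets.append(offset)  # merge into the fetch already running
            return "secondary"
        return "structural-stall"  # no room left to record this miss

    def fill(self, block):
        """The data arrived: release the MSHR and return waiting offsets."""
        return self.pending.pop(block, [])


mshrs = MSHRFile(targets_per_mshr=2)
print(mshrs.miss(7, 0))  # primary
print(mshrs.miss(7, 1))  # secondary
print(mshrs.miss(7, 2))  # structural-stall
```

Once `fill(7)` is called, the next miss to block 7 is a primary miss again.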

MSHR: implicit #

- Each MSHR has one field per word of the cache line; the offset is implied by the position of the field

- Multiple outstanding misses for the same word cannot be stored -> only one entry per address possible

MSHR: explicit #

- Each MSHR field explicitly stores the offset in the cache line

- multiple outstanding misses for the same word can be stored
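A sketch contrasting the two organizations, assuming that the implicit variant reserves one field per word of the line (so the field position encodes the offset) and that the explicit variant has a fixed number of fields that each store an offset. Class names and the register names used as destinations are invented for the example.

```python
class ImplicitMSHR:
    def __init__(self, words_per_line):
        # Field i belongs to word i; it holds the destination of the load.
        self.dest = [None] * words_per_line

    def add(self, word_offset, dest_reg):
        """Record a pending miss; return False if it cannot be stored."""
        if self.dest[word_offset] is not None:
            return False  # a second miss to the same word does not fit
        self.dest[word_offset] = dest_reg
        return True


class ExplicitMSHR:
    def __init__(self, num_fields):
        self.num_fields = num_fields
        self.fields = []  # (word offset, destination) pairs

    def add(self, word_offset, dest_reg):
        """Record a pending miss; return False if all fields are in use."""
        if len(self.fields) == self.num_fields:
            return False
        self.fields.append((word_offset, dest_reg))
        return True


implicit = ImplicitMSHR(words_per_line=8)
explicit = ExplicitMSHR(num_fields=2)
print(implicit.add(3, "r1"), implicit.add(3, "r2"))  # True False
print(explicit.add(3, "r1"), explicit.add(3, "r2"))  # True True
```

The explicit variant accepts two misses to word 3 but is limited by its number of fields, while the implicit variant is limited to one miss per word.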

MSHR: in-cache #

- Reuse the cache line itself to store information about pending misses

- Extra bit per cache line: a “transient bit” distinguishes miss information from valid data in the cache