Prefetching

Hardware prefetching

- Predict which addresses will be needed and load them in advance

- Prefetch-on-miss: on a cache miss, fetch the missed block and also prefetch the next one

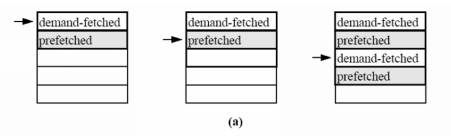

Tagged prefetch scheme: each cache line carries a tag bit; tag 1 means the line was prefetched and has not yet been referenced

- on miss: load the memory block and set its tag to 0

- also on miss: prefetch the next block and set its tag to 1

- on hit with tag 0: the line was demand-fetched or has already been referenced, so do nothing

- on hit with tag 1: set the line's tag to 0, then prefetch the next memory block and set that block's tag to 1

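The tagged scheme can be sketched as a small simulation. This is only a toy model under stated assumptions: the cache has unlimited capacity (no evictions), memory is a hypothetical `NUM_BLOCKS` blocks, and the names `ToyCache`, `prefetch_block`, `access_block` and `sweep_misses` are made up for illustration. Passing `use_tags == false` gives plain prefetch-on-miss for comparison.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define NUM_BLOCKS 1024 /* hypothetical size of the simulated memory, in blocks */

/* Toy cache with unlimited capacity: one entry per memory block. */
typedef struct {
    bool present[NUM_BLOCKS]; /* block is currently cached */
    bool tag[NUM_BLOCKS];     /* true = prefetched and not yet referenced */
    unsigned misses;          /* demand misses seen so far */
} ToyCache;

/* Bring a block in ahead of use and mark it as an unreferenced prefetch. */
void prefetch_block(ToyCache *c, size_t block) {
    if (block < NUM_BLOCKS && !c->present[block]) {
        c->present[block] = true;
        c->tag[block] = true;
    }
}

/* Reference one block. With use_tags == false this is plain prefetch-on-miss. */
void access_block(ToyCache *c, size_t block, bool use_tags) {
    if (block >= NUM_BLOCKS) return;
    if (!c->present[block]) {
        /* miss: load the block with tag 0, prefetch the next one with tag 1 */
        c->misses++;
        c->present[block] = true;
        c->tag[block] = false;
        prefetch_block(c, block + 1);
    } else if (use_tags && c->tag[block]) {
        /* first hit on a prefetched line: clear its tag and keep prefetching */
        c->tag[block] = false;
        prefetch_block(c, block + 1);
    }
    /* hit with tag 0: nothing to do */
}

/* Count demand misses for a sequential sweep over blocks 0 .. n-1. */
unsigned sweep_misses(size_t n, bool use_tags) {
    assert(n <= NUM_BLOCKS);
    ToyCache c = {0};
    for (size_t b = 0; b < n; b++)
        access_block(&c, b, use_tags);
    return c.misses;
}
```

In this model a sequential sweep over 100 blocks gives 1 demand miss with tags (`sweep_misses(100, true)`) and 50 without (`sweep_misses(100, false)`), because plain prefetch-on-miss only triggers a new prefetch on every second block.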

- Stream buffers

- The hardware tries to detect streaming memory accesses and prefetches lines for each detected stream into a stream buffer. On a cache miss, the stream buffers are checked; if one hits, the line is transferred to the cache

- Stream buffers can detect strided access patterns

- If the hardware has stream buffers, you can optimize for them by distributing (splitting) loops so that no single loop uses more concurrent streams than there are stream buffers
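Loop distribution for stream buffers might look like the following sketch. It assumes a hypothetical machine with fewer than six stream buffers and arrays that do not overlap, so the two statements are independent and can be split safely. The function names are made up for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Fused loop: six arrays are walked at once, so six streams are live. */
void sum_and_product_fused(size_t n,
                           double *a, const double *b, const double *c,
                           double *d, const double *e, const double *f) {
    assert(n == 0 || (a && b && c && d && e && f));
    for (size_t i = 0; i < n; i++) {
        a[i] = b[i] + c[i];
        d[i] = e[i] * f[i];
    }
}

/* Distributed loops: each loop walks only three arrays at a time. */
void sum_and_product_distributed(size_t n,
                                 double *a, const double *b, const double *c,
                                 double *d, const double *e, const double *f) {
    assert(n == 0 || (a && b && c && d && e && f));
    for (size_t i = 0; i < n; i++)
        a[i] = b[i] + c[i];
    for (size_t i = 0; i < n; i++)
        d[i] = e[i] * f[i];
}
```

Both versions compute the same results. Whether the distributed form is actually faster depends on the number of stream buffers and on the loop overhead, so it should be measured on the target machine.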

Evaluation

pro:

- reduces the miss rate

- reduces the miss penalty

con:

- wastes memory bandwidth when prefetched blocks are never used

- prefetched blocks might evict other blocks that are still needed (cache pollution)

Software prefetching

- Uses a non-blocking prefetch instruction

- The prefetch instruction should ignore exceptions

- Requires non-blocking caches

- The compiler can insert prefetch instructions

- Drawback: the additional instructions take up issue slots, which reduces the IPC of useful work
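A minimal sketch of software prefetching, assuming GCC or Clang, where `__builtin_prefetch(addr, rw, locality)` is available as a compiler builtin. The function name `gather_sum` and the value of `PREFETCH_DISTANCE` are assumptions for illustration, and a good distance has to be found by measurement. The example uses an indirect access pattern, which hardware prefetchers typically cannot predict.

```c
#include <assert.h>
#include <stddef.h>

#define PREFETCH_DISTANCE 16 /* hypothetical look-ahead in iterations; tune per machine */

/* Sum data[idx[i]] for i in [0, n), prefetching the element needed
 * PREFETCH_DISTANCE iterations later. */
double gather_sum(const double *data, const size_t *idx, size_t n) {
    assert(n == 0 || (data && idx));
    double sum = 0.0;
    for (size_t i = 0; i < n; i++) {
#if defined(__GNUC__)
        /* Guard the look-ahead so idx[] is never read out of bounds.
         * rw = 0: prefetch for reading; locality = 1: low temporal locality. */
        if (i + PREFETCH_DISTANCE < n)
            __builtin_prefetch(&data[idx[i + PREFETCH_DISTANCE]], 0, 1);
#endif
        sum += data[idx[i]];
    }
    return sum;
}
```

The prefetch is only a hint and does not change the result. The extra index load, bounds check and prefetch in every iteration are the instruction overhead listed above as the drawback.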